I've just built a first version of a system that offloads file downloads from the responsibility of Plone, while keeping authentication and authorization. With help from Apache and iw.fss, surprisingly few lines of code are needed to accomplish this.

First, here are two bulleted summaries of what is going on. Look further down in this post for a longer description:

Workflow

- User accesses page in Plone, where he is offered to download a file

- When clicking the download link, a redirect is made to a sub domain, with the exact same path + filename

- The subdomain "steals" a cookie from the user and uses that to authenticate an xml-rpc call to check if user has the right to download the file

- If True, mod_python lets the request through, and file is downloaded through Apache

Technology

- Have Apache in front

- Use iw.fss to store files on the file system

- Serve the files out on a subdomain with Apache

- Use url rewrite to make authentication cookie or session cookie accessible to the sub domain

- Use mod_python as access handler for download sub domain

- The mod_python script has an xmlrpc client that can be configured with cookies

- The xmlrpc client is configured with cookies, so to Plone it is the user himself

- xmlrpc asks Plone if the download is ok by calling a proxy method on the object to be downloaded

Longer description

Plone is not very good at serving out big files. A couple of concurrent huge downloads would take up a lot of Zope processes and threads. Here is a way of offloading the file downloading to other server processes (in this case Apache) with authentication and authorization intact.

The stuff needed is

- Apache with mod_python and mod_rewrite

- A custom transport agent for xml-rpc (included in this post)

- Plone

Let's look at how it is set up, going from the outside in:

Make the authentication or session cookie available to the download server

The user is browsing the web site. The first thing the user's browser hits is the Apache server, sending the request further to Plone. The user may or may not be logged in. The virtual server directive in Apache looks something like this:

<VirtualHost *:80>
    ServerName server.topdomain
    ProxyPreserveHost On
    RewriteEngine On

    # Match the __ac cookie if present, and make a new cookie with same value, but that can be sent to sub domains
    RewriteCond %{HTTP_COOKIE} __ac=([^;]+) [NC]
    RewriteRule ^/(.*) http://localhost:6080/VirtualHostBase/http/%{HTTP_HOST}:80/site/VirtualHostRoot/$1 [co=__download__ac:%1:.server.topdomain,L,P]

    RewriteRule ^/(.*) http://localhost:6080/VirtualHostBase/http/%{HTTP_HOST}:80/site/VirtualHostRoot/$1 [L,P]
</VirtualHost>

The RewriteRule looks pretty standard: it rewrites the request to fit the virtual host monster in Plone, and the Plone site with the id "site" is served out. However, there is some extra stuff, particularly the line:

RewriteCond %{HTTP_COOKIE} __ac=([^;]+) [NC]

This line is a condition that is triggered if the incoming request has a cookie named "__ac". The "__ac" cookie contains information that authenticates the user to the Plone site. If your site uses a cookie other than "__ac", put its name here instead. It does not matter if the cookie contains just a session id or the login information. The important thing is this part:

([^;]+)

...it matches the cookie value, and the brackets (parentheses) mean that Apache remembers this part of the pattern. Since this is the first (and actually only) pair of brackets in the pattern, it will be remembered as %1. In mod_rewrite, back references to a RewriteCond pattern are written with a percent sign, while back references to the RewriteRule's own url pattern use the standard dollar sign.
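To see what the condition captures, here is a minimal Python sketch that applies the same pattern to a Cookie header. The header value is made up for illustration, and `re.IGNORECASE` stands in for the `[NC]` flag:

```python
import re

# Same pattern as in the RewriteCond; re.IGNORECASE plays the role of [NC].
AC_PATTERN = re.compile(r"__ac=([^;]+)", re.IGNORECASE)

def extract_ac(cookie_header):
    """Return the value mod_rewrite would expose as %1, or None if no match."""
    match = AC_PATTERN.search(cookie_header)
    return match.group(1) if match else None

# Hypothetical Cookie header sent by a browser
header = 'lang=en; __ac="dXNlcjpzZWNyZXQ="; theme=dark'
print(extract_ac(header))      # the __ac value, up to the next ";"
print(extract_ac("lang=en"))   # None: the condition would not trigger
```

Note that the captured value still includes the surrounding quotes, which is why the access handler later in this post strips them.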

Now, let's look at the rewrite rule:

RewriteRule ^/(.*) http://localhost:6080/VirtualHostBase/http/%{HTTP_HOST}:80/site/VirtualHostRoot/$1 [co=__download__ac:%1:.server.topdomain,L,P]

The first part is standard. The square brackets at the end usually contain only control flow information (such as "stop here"), but here they also hold an instruction to send a cookie out with the response:

co=__download__ac:%1:.server.topdomain

We define here a new cookie called __download__ac (it can be called anything), but the important thing here is the dot in front of the domain. This means that the cookie is available to the domain server.topdomain and all sub domains. In this way this cookie can be shared with sub domains.
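As a rough illustration of what the dot-prefixed domain buys us, here is a small Python sketch. It builds an equivalent Set-Cookie value with the standard library and uses a deliberately simplified matching rule (`cookie_is_sent_to` is a hypothetical helper, not how any particular browser implements it):

```python
from http.cookies import SimpleCookie

def build_shared_cookie(name, value, domain):
    """Build a Set-Cookie header value scoped to a domain and its sub domains."""
    cookie = SimpleCookie()
    cookie[name] = value
    cookie[name]["domain"] = domain
    cookie[name]["path"] = "/"
    return cookie[name].OutputString()

def cookie_is_sent_to(host, cookie_domain):
    """Simplified domain matching: the exact host or any sub domain of it."""
    bare = cookie_domain.lstrip(".")
    return host == bare or host.endswith("." + bare)

print(build_shared_cookie("__download__ac", "abc123", ".server.topdomain"))
print(cookie_is_sent_to("download.server.topdomain", ".server.topdomain"))
print(cookie_is_sent_to("other.topdomain", ".server.topdomain"))
```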

The standard __ac cookie in Plone does not have the capability to be shared with sub domains. The __ac cookie is a bit insecure because it actually contains the user name and password in scrambled form, but for other reasons we wanted to keep it with this system.

Another way of making the cookie shared would be to write a PAS plugin for Plone that can be configured to send out the cookie with a dot-prefixed domain qualifier, but the above rewrite is hard to beat for brevity.

If one goes with the more secure session cookie, there seems to be a PAS plugin that already allows configuration with a dot-prefixed domain, and in that case the rewrite above is not necessary. Either way, at this point you should have a cookie with authentication information that can be read by sub domains.

Apache config of download server

When the user clicks on the download link he will be redirected to a sub domain. Let's look at the Apache configuration for that:

<VirtualHost *:80>
    ServerName download.server.topdomain
    DocumentRoot /var/www/html
    <Location />
        # Extend the Python path to locate your callable object
        PythonPath "sys.path+['/var/www/mod_python']"
        # Make Apache aware that we want to use mod_python
        AddHandler mod_python .py
        PythonAccessHandler downloadauth
    </Location>
</VirtualHost>

This looks pretty much like a standard virtual server directive in Apache for serving out static files from the file system. The only embellishment is that a python script gets registered as access handler for all requests. This means that the script does not serve out any content itself; it just sits as a gatekeeper and says yay or nay to whether Apache should serve the requested content to the user.

As per the configuration above, the script is named "downloadauth.py" and lives in the "/var/www/mod_python" directory on the server. Let's take a look at that script:

#!/usr/bin/env python
# -*-python-*-
#
from mod_python import apache, Cookie
import xmlrpclib
from cookiestransport import CookiesTransport

PLONE_URL = 'http://192.168.1.51:6080/site'

def accesshandler(req):
    """Return apache.OK if the authentication was successful,
    apache.HTTP_UNAUTHORIZED otherwise.
    """
    # Strip the file name; what remains is the path of the object in Plone
    plone_local_uri = "/".join(req.uri.split("/")[:-1])
    xmlrpc_server_url = PLONE_URL + plone_local_uri

    # Get the cookies sent with the request
    cookies = Cookie.get_cookies(req)
    # apache rewrite adds this cookie as a sub domain friendly copy of "__ac"
    cookie = cookies.get('__download__ac', None)
    cookies_spec = []
    if cookie is not None:
        cookie_value = cookie.value
        # Cookies values are delivered wrapped in quotes
        cookie_value = cookie_value.replace('"','')
        # We configure xmlrpc to use it as "__ac"
        cookie_spec = ['__ac', cookie_value]
        cookies_spec.append(cookie_spec)

    server = xmlrpclib.Server(xmlrpc_server_url, transport=CookiesTransport(cookies=cookies_spec))
    try:
        allowed = server.can_i_download()
    except (xmlrpclib.Fault, xmlrpclib.ProtocolError):
        # Assumption: a refused call surfaces as a fault or a non-200
        # response (i.e. an exception) rather than as a False return value
        allowed = False
    if allowed:
        return apache.OK
    else:
        return apache.HTTP_UNAUTHORIZED

This script takes the cookie we defined, and sends an xml-rpc call to Plone. The xml-rpc client is configured with a special transport agent that can take cookies. In this way the xml-rpc client gets authenticated as the user doing the download. Plone runs on Zope and Zope supports xml-rpc out of the box. All we need now is to access a method in Plone that has the same access rights as the file the user wants to download.
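The one non-obvious step in the script is how it turns the requested download path into the url of the object in Plone: it drops the trailing file name and prefixes the site url. A standalone sketch of just that step (the request path is a made-up example, and `xmlrpc_url_for` is a name chosen for illustration):

```python
PLONE_URL = "http://192.168.1.51:6080/site"  # same value as in the script above

def xmlrpc_url_for(request_uri, plone_url=PLONE_URL):
    """Drop the trailing file name and prefix the Plone site url."""
    object_path = "/".join(request_uri.split("/")[:-1])
    return plone_url + object_path

# Hypothetical request for a file stored under a Plone file object
print(xmlrpc_url_for("/afolder/another/folder/file_object/report.pdf"))
# http://192.168.1.51:6080/site/afolder/another/folder/file_object
```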

If we assume the object in Plone holding the download looks something like this:

object

method: download (permission: 'View')

method: can_i_download (permission: 'View')

I.e. it has two methods,

- One that serves out the object from inside of Plone

- One that just returns True

The first method is never going to be used; we do not want Plone to serve out the file from within Plone. However we make a note of what permission that method has (usually "View"). We then make a method "can_i_download" (or whatever name you fancy) with the same permission. This method just returns True. It can look like this:

security.declareProtected(permissions.View,'can_i_download')
def can_i_download(self):
    """If user has permission to run this method, he
    has permission to download the file"""
    return True
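One thing to watch for: when the caller lacks the permission, the xml-rpc call will normally not come back as False. Assuming Plone answers a refused call with an xml-rpc fault or a non-200 response (for example a redirect to the login form), the client raises an exception instead. Here is a sketch, in Python 3 syntax, of mapping those outcomes to a yes/no decision; `check_access` and the fake callables are hypothetical names used only for illustration:

```python
import xmlrpc.client

def check_access(remote_call):
    """Return True only if the remote method ran and returned a true value."""
    try:
        return bool(remote_call())
    except (xmlrpc.client.Fault, xmlrpc.client.ProtocolError):
        # Refused or failed calls count as "no access"
        return False

def allowed():
    return True

def refused_with_fault():
    raise xmlrpc.client.Fault(401, "Unauthorized")

def refused_with_redirect():
    raise xmlrpc.client.ProtocolError("plone.example/site", 302, "Moved Temporarily", {})

for call in (allowed, refused_with_fault, refused_with_redirect):
    print(call.__name__, check_access(call))
```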

The cookie aware transport agent for xmlrpc

The code for the cookie aware transport class is largely taken from:

Roberto Rocco Angeloni » Blog Archive » Xmlrpclib with cookie aware transport

My version:

- Does away with the ability to receive cookies from the server

- Adds the capability to configure the client with arbitrary cookies from code

# A module with a class that allows the xmlrpc client to be configured with a list of cookies
# For authentication with plone xml-rpc methods
# Based completely on code from Rocco Angeloni:
# http://www.roccoangeloni.it/wp/2008/06/13/xmlrpclib-with-cookie-aware-transport/
import xmlrpclib

class CookiesTransport(xmlrpclib.Transport):
    """A transport class for xmlrpclib, that can be configured with
    a list of cookies and uses them in the xml-rpc request"""

    def __init__(self, cookies=None):
        """ cookies parameter should be a list of two item lists/tuples [id,value]"""
        if hasattr(xmlrpclib.Transport, '__init__'):
            xmlrpclib.Transport.__init__(self)
        # Avoid sharing one mutable default list between instances
        self.cookies = cookies or []

    def request(self, host, handler, request_body, verbose=0):
        # issue XML-RPC request
        h = self.make_connection(host)
        if verbose:
            h.set_debuglevel(1)
        self.send_request(h, handler, request_body)
        self.send_host(h, host)
        # Debug logging; remove in production, since the cookie carries credentials
        fo = open('/tmp/modpythonlogger.txt','a')
        fo.write('Value for cookiespec is:%s\n' % (self.cookies,))
        fo.close()
        for cookie_spec in self.cookies:
            h.putheader("Cookie", "%s=%s" % (cookie_spec[0],cookie_spec[1]) )
        self.send_user_agent(h)
        self.send_content(h, request_body)
        errcode, errmsg, headers = h.getreply()
        if errcode != 200:
            raise xmlrpclib.ProtocolError(
                host + handler,
                errcode, errmsg,
                headers
                )
        self.verbose = verbose
        try:
            sock = h._conn.sock
        except AttributeError:
            sock = None
        return self._parse_response(h.getfile(), sock)
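The transport above targets the Python 2 xmlrpclib of the time. For comparison, on Python 3.8 or later the standard library's `xmlrpc.client.ServerProxy` accepts a `headers` argument, so the same effect may be achievable without a custom transport. A minimal sketch, with a made-up url and cookie value; `cookie_header` and `make_plone_proxy` are hypothetical helper names:

```python
import xmlrpc.client

def cookie_header(cookies):
    """Join (name, value) pairs into one Cookie header value."""
    return "; ".join("%s=%s" % (name, value) for name, value in cookies)

def make_plone_proxy(url, cookies):
    """Create an XML-RPC proxy that sends the given cookies with every call.

    Requires Python 3.8+, where ServerProxy accepts a ``headers`` argument.
    No connection is opened until a remote method is actually called.
    """
    headers = [("Cookie", cookie_header(cookies))]
    return xmlrpc.client.ServerProxy(url, headers=headers)

# Hypothetical url and cookie value
proxy = make_plone_proxy(
    "http://localhost:6080/site/afolder/file_object",
    [("__ac", "dXNlcjpzZWNyZXQ=")],
)
print(cookie_header([("__ac", "dXNlcjpzZWNyZXQ="), ("lang", "en")]))
```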

iw.fss - care and feeding

iw.fss is a very slick product for external storage from Ingeniweb. Deciding which fields should be stored externally can be done with a ZCML file; you do not need to touch the code of the content types.

Making migration work in iw.fss

iw.fss has a control panel that allows you to migrate the data already stored in the fields to the external storage. In our case a good number of field migrations failed. It turned out that, at least in our system (which was prepopulated from an old legacy system), the data was sometimes stored in an object type, "OFS.Image.Pdata", that the iw.fss migration could not handle (a bug ticket has been submitted to the iw.fss people about this).

The following monkey patch (used with collective.monkeypatcher), stored in a "patches.py" file, fixed that problem:

import cgi
from ZPublisher.HTTPRequest import FileUpload
import cStringIO
from iw.fss.FileSystemStorage import FileUploadIterator

old__init__ = FileUploadIterator.__init__

def new__init__(self, file, streamsize=1<<16):
    """ this is a file upload """
    if not hasattr(file, 'read') and hasattr(file,'data'):
        data = str(file) # see OFS.Image.Pdata
        fs = cgi.FieldStorage()
        fs.file = cStringIO.StringIO(data)
        file = FileUpload(fs)
    return old__init__(self, file, streamsize)

...with the following ZCML:

<configure
    xmlns="http://namespaces.zope.org/zope"
    xmlns:monkey="http://namespaces.plone.org/monkey"
    i18n_domain="my.application">

    <include package="collective.monkeypatcher" />

    <monkey:patch
        description="Patching FileUploadIterator to handle OFS.Image.Pdata objects"
        class="iw.fss.FileSystemStorage.FileUploadIterator"
        original="__init__"
        replacement=".patches.new__init__"
        />

</configure>
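The reason such a patch is needed: a Pdata object keeps large data as a chain of chunks, each with a `data` attribute and a `next` pointer, and it has no `read` method. The sketch below imitates that structure with a stand-in class (`Chunk` is hypothetical, not the Zope class) and shows the same idea as the patch in Python 3 syntax: flatten the chunks and wrap them in a file-like object.

```python
import io

class Chunk:
    """Stand-in for a Pdata-like node: a piece of data plus a link to the next piece."""
    def __init__(self, data, next=None):
        self.data = data
        self.next = next

def as_file(obj):
    """Return a file-like object for either a real file or a chain of chunks."""
    if hasattr(obj, "read"):
        return obj
    parts = []
    node = obj
    while node is not None:
        parts.append(node.data)
        node = node.next
    return io.BytesIO(b"".join(parts))

chain = Chunk(b"first ", Chunk(b"second ", Chunk(b"third")))
print(as_file(chain).read())   # b'first second third'
```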

Selecting storage strategy in iw.fss and how to make the url redirect

iw.fss can store the external files in different layouts, called "strategies". I chose "site2". "site2" mirrors the directory structure of Plone completely, and then adds the file in the innermost sub directory. Problem is, the path inside of Plone goes to the last sub directory, but Plone does not tack on the name of the file at the end of the url, i.e. if the file object in Plone has the url:

http://server.topdomain/afolder/another/folder/file_object

iw.fss will store it (with site2 layout) as:

/afolder/another/folder/file_object/filename

That "filename" can be anything. Thankfully iw.fss also stores a file next to the file with a fixed name "fss.cfg". This stores the name of the other file (the one we want to serve out).

I wrote a tool that can be configured with info on where on the file system iw.fss stores its data, and what the url of the download server is. Let's say the tool is called "tool_that_stores_fss_info". If you do not want to write a tool, a config sheet in portal_properties will do too.

In your file object, write a method that goes something like this:

# At module level
import ConfigParser
from Products.CMFCore.utils import getToolByName

# Inside the content type class
security.declarePublic('redirectToExternalFile')
def redirectToExternalFile(self):
    """Redirects to external download url"""
    sc_tool = getToolByName(self, 'tool_that_stores_fss_info')
    portal_url = getToolByName(self, 'portal_url')()
    local_url = self.absolute_url()[len(portal_url):] # Extract local part of url
    file_path_to_download = sc_tool.fs_path_to_download_root + local_url
    # fss.cfg sits next to the stored file and records its name
    config = ConfigParser.ConfigParser()
    config.read(file_path_to_download + '/fss.cfg')
    file_name = config.get('FILENAME', 'file')
    url_to_download = sc_tool.url_to_download_root + local_url + "/" + file_name
    #return url_to_download
    self.REQUEST.response.redirect(url_to_download)
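To try the lookup outside of Plone, here is a self-contained Python 3 sketch that builds a throwaway storage tree and resolves the download url from it. The layout of fss.cfg (a `[FILENAME]` section with one option per field) is an assumption inferred from the `config.get('FILENAME', 'file')` call above, and `download_url` is a hypothetical helper:

```python
import configparser
import os
import tempfile

def download_url(storage_root, download_root_url, local_url, field="file"):
    """Look up the stored file name in fss.cfg and build the download url."""
    config = configparser.ConfigParser()
    config.read(os.path.join(storage_root, local_url.lstrip("/"), "fss.cfg"))
    file_name = config.get("FILENAME", field)
    return download_root_url + local_url + "/" + file_name

# Build a throwaway storage tree that mimics the "site2" layout
storage_root = tempfile.mkdtemp()
object_dir = os.path.join(storage_root, "afolder", "file_object")
os.makedirs(object_dir)
with open(os.path.join(object_dir, "fss.cfg"), "w") as cfg:
    cfg.write("[FILENAME]\nfile = report.pdf\n")

print(download_url(storage_root, "http://download.server.topdomain", "/afolder/file_object"))
```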

Buildout configuration of iw.fss

Below is an fss configuration that works for a Plone site named "site" stored in the Zope root, using "site2" as storage layout, and the external files get stored inside the var directory of the buildout.

[fss]
recipe = iw.recipe.fss
zope-instances =
    ${instance:location}
storages =
# The first is always generic
    global /
    site /site site2 ${buildout:directory}/var/fss_storage_site

Other location of the external files

In the above case the files get stored in the var directory inside of the Plone buildout. It may be a better idea to store them on the server in a directory structure with more permanence, like "/var/www/html" as suggested in the Apache configuration for the download server earlier in this post. The var directory in a buildout does not get overwritten on invocations of buildout, but it is still in the buildout directory structure.

Make sure, however, that you have the iw.fss storage on the same volume as the Plone buildout:

Many unixish systems have different directories (/tmp, /home, /var and so on) on different mounted volumes. Python's os.rename cannot move files between volumes, and therefore the code in iw.fss that uses os.rename cannot handle the storage being on a different mounted volume (say, on "/var/www/html", when the Plone site is a buildout in the "/home" hierarchy). shutil.move may be an alternative to os.rename (a bug ticket has been submitted to the iw.fss people about this).
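For illustration, a move that survives crossing a volume boundary could look roughly like this. It is a generic sketch, not the iw.fss code; `move_across_volumes` is a hypothetical name, and the demo runs inside one temporary directory, so only the plain rename path is exercised here:

```python
import errno
import os
import shutil
import tempfile

def move_across_volumes(src, dst):
    """Rename when possible, fall back to copy-and-delete across file systems."""
    try:
        os.rename(src, dst)
    except OSError as exc:
        if exc.errno != errno.EXDEV:
            raise
        # Different mounted volume: shutil.move copies the file, then removes the source
        shutil.move(src, dst)

workdir = tempfile.mkdtemp()
src = os.path.join(workdir, "upload.tmp")
dst = os.path.join(workdir, "stored.bin")
with open(src, "wb") as handle:
    handle.write(b"payload")
move_across_volumes(src, dst)
print(os.path.exists(src), os.path.exists(dst))
```

shutil.move on its own already tries a rename first, so calling it directly would be an even shorter fix.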

Some possible improvements and simplifications

What changes could be made to make this more of a solution ready out-of-the box, to be deployed on different servers?

Getting the cookie to the sub domain

- The rewrite in Apache could be simplified, or

- The cookie handler in Plone could check what domain it is serving the cookie in, and tack on a dot. This behavior could be switched on and off with a checkbox in the ZMI. In this way no Apache rewrite would be necessary at all.

mod_python contacting Plone

- Assuming Plone runs on port 8080 and with the same ip number as mod_python could be a default in the mod_python script

Constructing the right url to redirect to

- The redirect code could be factored out into a view (if they work with xml-rpc), or some other mechanism, so that a simple ZCML configuration would connect a downloadable field in a content type with the redirect code

- It is probably possible to ask iw.fss where its file system storage is, in that way no separate setting would be needed for this in a tool or property sheet

- The default domain to redirect to could be the one Plone is on, with "download." tacked on in front. Together with the preceding suggestion, no settings would need to be stored in Plone
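The last suggestion is simple enough to sketch. Assuming the portal url is available as a string, the default download root could be derived like this (`default_download_root` is a hypothetical helper, shown in Python 3 syntax):

```python
from urllib.parse import urlsplit, urlunsplit

def default_download_root(portal_url, prefix="download."):
    """Prefix the host name of the portal url, keeping scheme and port; drop the path."""
    parts = urlsplit(portal_url)
    return urlunsplit((parts.scheme, prefix + parts.netloc, "", "", ""))

print(default_download_root("http://server.topdomain"))
# http://download.server.topdomain
```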

Replacing xml-rpc

- Instead of xml-rpc, maybe one could simply use an authenticated HEAD request to the file in Plone? Then no custom authentication method would be needed.
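A rough sketch of what such a request could look like with the Python 3 standard library. The url and cookie value are made up, and the request is only prepared here, not sent:

```python
import urllib.request

def build_head_request(file_url, ac_cookie):
    """Prepare (but do not send) a HEAD request that carries the user's cookie."""
    return urllib.request.Request(
        file_url,
        method="HEAD",
        headers={"Cookie": "__ac=%s" % ac_cookie},
    )

# Hypothetical internal url and cookie value
request = build_head_request(
    "http://localhost:6080/site/afolder/file_object", "dXNlcjpzZWNyZXQ="
)
print(request.get_method(), request.get_header("Cookie"))
```

Sending it with `urllib.request.urlopen(request)` raises `urllib.error.HTTPError` for a 401 or 403 answer. A site that redirects anonymous users to a login form would need extra care, since redirects are followed by default.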

Use mod-xsendfile

Since doing this work, I have noticed that Jazkarta has commissioned a file upload/download optimization:

One of the parts of this is Apache's mod_xsendfile module. It seems that its purpose is to watch the response headers from a proxied server (e.g. Zope), and if an X-Sendfile header is found, it cancels the response and instead serves out a file via Apache, located on the file system according to the value of the X-Sendfile header.
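To make the idea concrete, here is a tiny generic WSGI sketch of the backend side: after its own permission check, the application returns an empty body plus an X-Sendfile header and leaves the actual transfer to the front server. This is not Zope or Plone code; the permission check, the file path and the helper names are all made up for illustration:

```python
def make_download_app(is_allowed, file_path):
    """Build a tiny WSGI app that delegates the file transfer to the front server."""
    def app(environ, start_response):
        if not is_allowed(environ):
            start_response("401 Unauthorized", [("Content-Type", "text/plain")])
            return [b"Not allowed"]
        # Empty body: the front server is expected to replace it with the file
        start_response("200 OK", [("X-Sendfile", file_path)])
        return [b""]
    return app

def call(app, environ):
    """Call a WSGI app directly and return (status, headers as dict)."""
    captured = {}
    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)
    app(environ, start_response)
    return captured["status"], captured["headers"]

# Hypothetical check: any request carrying a cookie is allowed
app = make_download_app(
    lambda environ: "HTTP_COOKIE" in environ,
    "/var/www/html/afolder/file_object/report.pdf",
)
print(call(app, {"HTTP_COOKIE": "__ac=abc"}))
print(call(app, {}))
```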

Our current implementation is working, but this would simplify things a bit: no separate domain, cookie rewrites or xml-rpc calls would be needed. On the other hand, there would be a new module to install and test:

http://tn123.ath.cx/mod_xsendfile/

If I understand correctly, the new BLOB support in Plone 4 is supposed to be easy on the CPU thanks to file iterators. This ought to mean that one can have a good number of threads per ZEO client, and these should be able to serve quite concurrently (i.e. non-blocking).

Change the architecture

Tramline (PloneTramline) uses Apache filters to intercept requests and responses in Apache. In this way it can monitor all requests and responses and insert a file on the way out, and take care of a file on the way in.

It would be cool if it were possible to configure Apache in such a way that you can choose proxying based on the response headers (from the first proxy you try). If those response headers match some criterion (such as there being an "x-download-from-somewhere-else" header), Apache would simply switch to another server it proxies. I do not think it can be done, but this page talks about a possible implementation:

Smart filter

In fact the above mentioned mod_xsendfile is a variation on this theme.

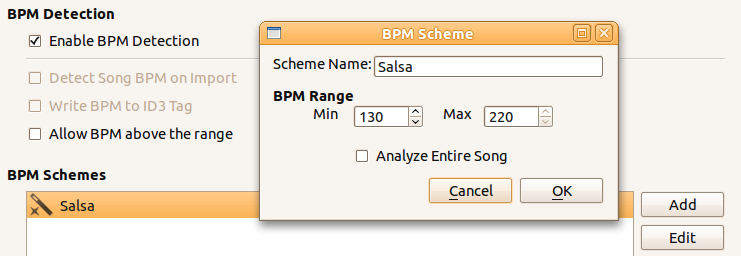

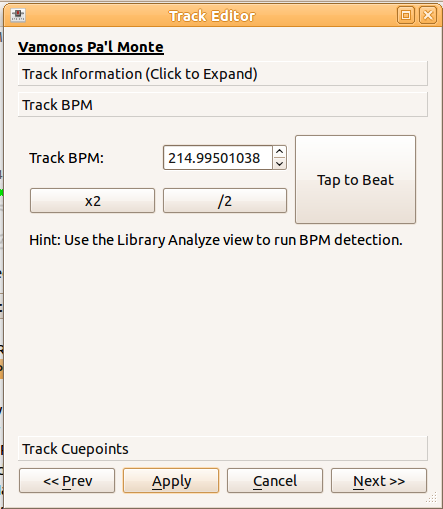

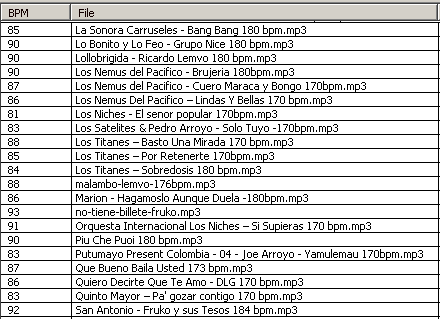
