Counting bpm (beats per minute) on Linux

Last updated: November 25, 2010

soundstretch file.wav -bpm

It detects about 50% of my salsa music correctly (sample size: about 30 songs). I have written a wrapper around soundstretch for bpm assessment of mp3 files. It is here.

There is also bpmcount, part of BpmDj. Beware that it runs about 100 times slower than soundstretch: 6 minutes compared to 3.6 seconds for the same song. I have only tried one song in bpmcount so far, and it got it wrong, reporting 180 bpm for a song that is around 208 bpm.

For better detection than soundstretch (about 80% correct) and automatic bpm detection with a GUI, use the free Abyssmedia BPM Counter and run it under Wine. PistonSoft BPM Detector also runs under Wine and writes BPM tags, but performs worse than Abyssmedia and MixMeister.

For manual beat counting, use Salsa City Beat Counter, or the beat counter in Banshee, which also gives you a GUI to the soundtouch code that soundstretch uses.

Bpm counting software on Linux

What software can you use to count beats per minute in your songs using Linux? Although there is a slew of software on Windows, the selection is smaller on Linux, and if you look closer at the Linux offerings you will find that they often use the same bpm counting code.

The two sources of bpm counting code that I have found are the soundtouch library by Olli Parviainen and the bpmcount program from BpmDj by Werner Van Belle. Van Belle has also written a paper outlining the algorithm used in bpmcount.

Soundtouch is used by the command line application soundstretch, and by the GUI applications Banshee (in the form of a GStreamer plugin) and Mixxx (as a library).

Bpmcount can be used as a standalone command line application and also from within BpmDj.

I have used salsa music to test the counters, and salsa songs have many rhythms for a poor piece of software to latch on to. Salsa also lacks deep bass (a subwoofer is useless), which may make the task more difficult. Salsa typically ranges from 165 bpm to 210 bpm.

Bars and beats

If you have looked at the Salsa City Beat Counter, you may have noticed that further down the page it says "bar", not "beat". Let's sort out the difference between bars per minute and beats per minute, and why you would want to know either of them for a piece of music. Most music has 4 beats to a bar, so a song at 160 bpm (beats per minute) has 40 bpm (ehrm, bars per minute). In this text, bpm will henceforth always mean beats per minute.

As for why you want to know the bpm: since you are reading this, you probably already know precisely why, but generally speaking it is useful in connection with dancing. If you are DJ'ing or practicing dancing, it helps to play songs that fall within a certain range of dance speed. If your software includes pitch shifting and time stretching, you can also use bpm values to sync up songs.

Command line applications

Soundtouch is used by the command line application soundstretch, and bpmcount from BpmDj can be used as a standalone command line application. Both operate on WAV files rather than mp3 files. In my tests bpmcount is approximately 100 times slower than soundstretch: a song that takes 3.6 seconds to classify with soundstretch takes a full six minutes with bpmcount. I have only tried one song in bpmcount so far, and it got it wrong, reporting 180 bpm for a song that is around 208 bpm. I have tried soundstretch on about 30 files.

Soundstretch has a tendency to output the bpm count as a quarter or a half of the real count. It reported the 208 bpm file as 52 bpm. I have written a wrapper around it to compensate for this and to make it operate on mp3 files. It is here.
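The wrapper itself is not reproduced here, but its outline can be sketched. The Python below is a minimal, hypothetical version, not the author's code: it assumes mpg123 is installed for the mp3-to-WAV step (its `-w` option writes a WAV file), that soundstretch prints its estimate on a line reading "Detected BPM rate <number>" (check what your version prints), and it uses a hypothetical 150 bpm floor as the sanity window for salsa. All function names are made up for illustration.

```python
import re
import subprocess
import tempfile
from pathlib import Path
from typing import Optional

# Assumption: soundstretch reports its estimate as "Detected BPM rate 52.0".
BPM_LINE = re.compile(r"Detected BPM rate\s+([0-9]+(?:\.[0-9]+)?)")


def parse_soundstretch_output(text: str) -> Optional[float]:
    """Return the bpm value found in soundstretch's console output, if any."""
    match = BPM_LINE.search(text)
    return float(match.group(1)) if match else None


def fix_octave(bpm: float, low: float = 150.0) -> float:
    """Double a too-low estimate until it reaches the sanity window's floor."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    while bpm < low:
        bpm *= 2
    return bpm


def mp3_bpm(mp3_path: str, low: float = 150.0) -> Optional[float]:
    """Decode an mp3 to a temporary WAV, run soundstretch -bpm, fix the octave."""
    with tempfile.TemporaryDirectory() as tmp:
        wav_path = str(Path(tmp) / "decoded.wav")
        # Assumption: mpg123 is on PATH; -q is quiet, -w names the WAV output.
        subprocess.run(["mpg123", "-q", "-w", wav_path, mp3_path], check=True)
        result = subprocess.run(
            ["soundstretch", wav_path, "-bpm"],
            capture_output=True,
            text=True,
        )
    # The estimate may be printed on stderr, so search both streams.
    bpm = parse_soundstretch_output(result.stdout + result.stderr)
    # A missing or zero estimate is reported as None.
    return fix_octave(bpm, low) if bpm else None
```

With these assumptions, a raw soundstretch estimate of 52 comes back from `fix_octave` as 208. Doubling only corrects estimates that are too low; a detector that also overshoots needs folding in both directions.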

Mixxx

Mixxx is a piece of DJ software with built-in beat counting. It has a reasonably simple interface for analyzing files, once you find it, but the BPM is not written to disk. Mixxx uses the soundtouch library.

There is an analyze library view where you can select and analyze files, including getting the bpm.

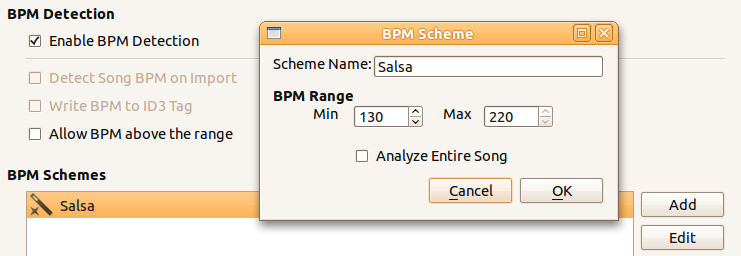

You can register profiles that set sane maximum and minimum bpm values for your music genres.

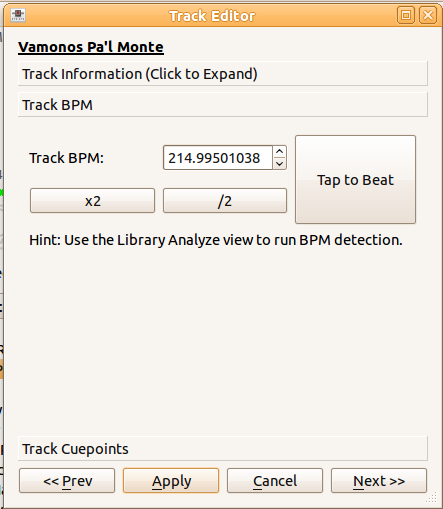

But alas, you can't get Mixxx to write the bpm to disk, despite the very nice properties dialog with doubling and halving, beat tapping and a Next button. The problem is that "Apply" does not write the bpm to disk.

Bpmdj

There is a native Linux product called BpmDj, but I did not get it to compile in the time I was willing to spend on it (and the precompiled binary did not work for me on Ubuntu 9.10 or 10.10). However, it contains a command line app called bpmcount, and that worked just fine.

Banshee

Banshee is a music player quite similar to Rhythmbox (but written on the Mono framework). It is the only music player on Linux I have seen so far that takes bpm seriously: bpm counting and tagging are well integrated in the GUI. It uses the soundtouch library in the form of a GStreamer plugin, so its detection performance can be expected to be identical to soundstretch's, but with rounding errors: soundtouch tends to output a bpm rate that is a quarter or a half of the correct rate. Multiplying by, e.g., 4 rectifies this, but since Banshee reports the bpm count as an integer, you lose a bit of precision.
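How much precision is lost can be bounded with a line of arithmetic. This assumes Banshee rounds soundtouch's raw estimate x to the nearest integer before you multiply by the correction factor k (2 or 4); if it truncates instead, the bound becomes k rather than k/2.

```latex
% x: raw soundtouch estimate, k: correction factor (2 or 4)
\left| k \cdot \operatorname{round}(x) - k x \right|
  = k \left| \operatorname{round}(x) - x \right|
  \le \frac{k}{2}
% Example with k = 4: a stored value of 52 means x was between 51.5 and 52.5,
% so the corrected tempo is somewhere between 206 and 210 bpm, i.e. up to 2 bpm off.
```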

Running Windows software under wine

One of the most recommended automatic beat counters for Windows is MixMeister, but according to the WineHQ application database it does not run well under Wine on Linux. I have tried it: it installs and runs, but no beat counting takes place.

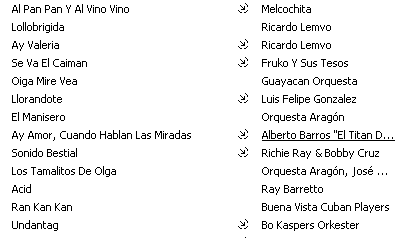

However, Abyssmedia BPM Counter for Windows runs just fine under Wine and does a pretty good job. With BPM Counter you have to read the bpm counts off the screen; there is no facility for writing them back to the mp3 files or to a separate file. It detected 80% of my salsa music correctly, even though salsa songs have many rhythms for a poor piece of software to latch on to. It did, however, fairly consistently report half the bpm: about 70% of the songs were classified at half speed. A simple reality check fixed that: salsa music ranges from 150 bpm to 210 bpm, so a 96 bpm classification is actually 192 bpm. I made the same adjustments to the soundstretch results.
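Percentages like these are easiest to keep straight if each detection is labelled against the verified tempo. The Python sketch below is one hypothetical way to do it: a detection counts as "half" or "quarter" when doubling or quadrupling it lands within an assumed tolerance (3 bpm here) of the verified bpm, and anything else, such as a 2/3 error, counts as "wrong". The names and the tolerance are illustrative, not taken from any of the tools discussed.

```python
from collections import Counter

# Multipliers that turn a half- or quarter-speed detection into the real tempo.
OCTAVE_ERRORS = (("correct", 1), ("half", 2), ("quarter", 4))


def classify(detected: float, actual: float, tolerance: float = 3.0) -> str:
    """Label a detection 'correct', 'half', 'quarter' or 'wrong' versus the verified bpm."""
    for label, factor in OCTAVE_ERRORS:
        if abs(detected * factor - actual) <= tolerance:
            return label
    return "wrong"


def summarize(pairs: list[tuple[float, float]], tolerance: float = 3.0) -> dict[str, float]:
    """Share of (detected, actual) pairs usable after an octave fix, and share at half speed."""
    if not pairs:
        raise ValueError("no detections to summarize")
    counts = Counter(classify(d, a, tolerance) for d, a in pairs)
    usable = counts["correct"] + counts["half"] + counts["quarter"]
    return {
        "usable_after_octave_fix": usable / len(pairs),
        "reported_at_half_speed": counts["half"] / len(pairs),
    }
```

With made-up pairs (not the author's data), `summarize([(96, 192), (190, 192), (120, 180), (52, 208)])` returns `{"usable_after_octave_fix": 0.75, "reported_at_half_speed": 0.25}`.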

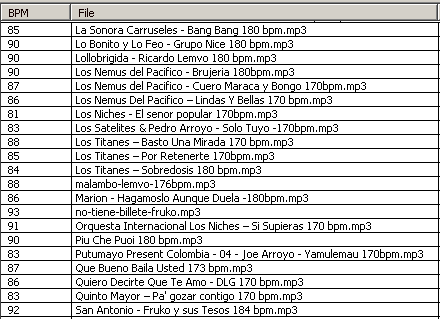

In the second column, "File", the correct, verified bpm count (give or take a few beats) is appended to each song's file name.

Abyssmedia BPM Counter does a pretty good job, as long as you double the bpm. Pity it does not write bpms to disk.

Looking for something that writes tags directly, I also tried BPM ProScan running in a Windows 2000 installation under VMware. It did not perform well on salsa songs. For example, Lollobrigida by Ricardo Lemvo was classified as 120 bpm. It has 180 bpm in the first half and a bit over 160 bpm in the second half. Other bpm counters sometimes report it as 90 bpm, which is understandable (exactly half of 180), but 120 bpm is right out.

PistonSoft BPM Detector

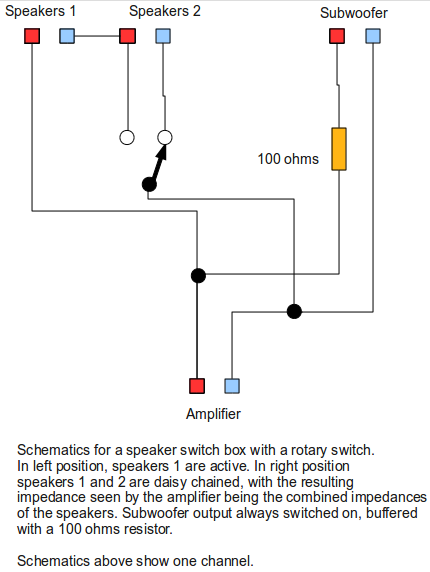

PistonSoft BPM Detector installs and runs under Wine. Below is a screenshot that compares the actual bpm count of some files with the classifications made by PistonSoft BPM Detector and MixMeister BPM Analyzer.

In the first column, "Filename", the correct, verified bpm count (give or take a few beats) is appended to each file name. The next column, "BPM", holds the bpm count from PistonSoft BPM Detector running under Wine, and the third column, "Tag BPM", shows the bpm tag written to the files by MixMeister BPM Analyzer running under Windows Vista:

It is pretty clear that MixMeister BPM Analyzer outperforms PistonSoft BPM Detector on salsa music, although you often need to double the bpm classification for MixMeister BPM Analyzer.

However, PistonSoft gets points for running under my Wine installation, which MixMeister does not.

Mixmeister BPM analyzer

Getting back to MixMeister BPM Analyzer: I finally gave in and installed it on a Windows Vista machine. It dumps the bpm data to a tab-delimited data file, with the bpm count as the last item in each row. I ran it on the same files I had classified with BPM Counter (and adjusted manually). I had encoded the bpm count right into those file names, and since the file names were included in MixMeister's data file, it was easy to compare the classifications of MixMeister and Abyssmedia: they were often within one bpm of each other. Reading more on the Internet, it seems that MixMeister does write to the ID3v2 BPM tag! It just doesn't tell you about it. Looking at one of my test files in a hex editor, there is indeed a TBPM field. It may be worth firing up Windows just for MixMeister, then, as long as it does not overwrite an existing BPM tag.
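Whether a file already carries a TBPM frame can also be checked without a hex editor. Below is a minimal Python sketch of an ID3v2.3/2.4 reader, written against the ID3v2 layout (a 10-byte tag header with a syncsafe size, then frames with a 4-character ID, a 4-byte size and 2 flag bytes). It skips ID3v2.2 tags and ignores unsynchronisation, so treat it as illustrative; the function names are hypothetical. Running `file_has_bpm_tag` over a collection before starting MixMeister would list the files whose existing BPM tags could be at risk.

```python
from typing import Optional

# ID3v2 text-frame encoding byte -> Python codec name.
ENCODINGS = {0: "latin-1", 1: "utf-16", 2: "utf-16-be", 3: "utf-8"}


def _syncsafe(b: bytes) -> int:
    """Decode a 4-byte ID3v2 syncsafe integer (7 useful bits per byte)."""
    return (b[0] << 21) | (b[1] << 14) | (b[2] << 7) | b[3]


def read_tbpm(data: bytes) -> Optional[str]:
    """Return the TBPM text of an ID3v2.3/2.4 tag at the start of data, or None."""
    if len(data) < 10 or data[:3] != b"ID3":
        return None
    major, flags = data[3], data[5]
    if major not in (3, 4):
        return None  # ID3v2.2 uses 3-character frame IDs; not handled here
    end = 10 + _syncsafe(data[6:10])  # tag size excludes the 10-byte header
    pos = 10
    if flags & 0x40:  # skip the extended header if present
        if major == 3:
            pos += 4 + int.from_bytes(data[10:14], "big")  # size excludes itself
        else:
            pos += _syncsafe(data[10:14])  # v2.4 size includes itself
    while pos + 10 <= end:
        frame_id = data[pos:pos + 4]
        if frame_id == b"\x00\x00\x00\x00":
            break  # reached padding
        raw_size = data[pos + 4:pos + 8]
        size = _syncsafe(raw_size) if major == 4 else int.from_bytes(raw_size, "big")
        body = data[pos + 10:pos + 10 + size]
        if frame_id == b"TBPM" and body:
            encoding = ENCODINGS.get(body[0], "latin-1")
            return body[1:].decode(encoding, errors="replace").strip("\x00 ")
        pos += 10 + size
    return None


def file_has_bpm_tag(path: str) -> bool:
    """True if the mp3 at path already carries a TBPM frame."""
    with open(path, "rb") as f:
        header = f.read(10)
        if len(header) < 10 or header[:3] != b"ID3":
            return False
        rest = f.read(_syncsafe(header[6:10]))
    return read_tbpm(header + rest) is not None
```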

I am currently classifying 300+ mp3s with it, and I notice that it almost always suggests half the actual bpm of the song. Looking through the numbers, this is because it never assigns anything above 165 bpm to a song. When writing my wrapper around soundstretch I applied a sanity window to the bpm classifications, and it seems that MixMeister does the same: it fits everything between 165 bpm and, probably, 83 bpm at the low end. Since salsa pretty much starts at 165 bpm and goes upwards from there, this explains why the reported bpm is consistently half the actual bpm.
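The consistent halving follows from the arithmetic of factor-of-two errors: a window [low, 2 * low) spans exactly one "octave", so folding a tempo into it by halving or doubling always gives exactly one answer. This Python sketch assumes MixMeister behaves like such a fold with low = 82.5 (half of 165, matching the "probably 83 bpm" guess); that is an inference from its output, not documented behaviour, and the function name is hypothetical.

```python
def fold_into_window(bpm: float, low: float) -> float:
    """Halve or double bpm until it lies in the one-octave window [low, 2 * low)."""
    if bpm <= 0 or low <= 0:
        raise ValueError("bpm and low must be positive")
    while bpm >= 2 * low:
        bpm /= 2
    while bpm < low:
        bpm *= 2
    return bpm
```

For example, `fold_into_window(192, 82.5)` gives 96.0, the halved value MixMeister tends to report for a 192 bpm song, while `fold_into_window(96, 165)` restores 192.0 for a song known to be salsa.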

Manual beat counters

How do I know the classifications were correct? I double-checked them (not all songs, but many) with the JavaScript-based manual beat counter Beat Counter, which runs in a browser and hence works just fine under Linux. With Beat Counter, you start with a mouse click and tap the beat with any key on the keyboard (do not use the space bar on Linux though; it will scroll the page).

Bear in mind that the mouse click is counted as one tap. If you casually start it with a mouse click and then wait a few seconds before tapping, it will assume there was one very long beat between the mouse click and the first tap. As a result, the average bpm will be wrong: it slowly rises but never reaches the true bpm of the song.
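The effect is easy to reproduce. The Python sketch below is not Beat Counter's actual JavaScript; it computes an average tempo from tap times in seconds as 60 × (number of intervals) / (elapsed time), with a hypothetical `drop_first` option that discards the starting click.

```python
def tap_bpm(timestamps: list[float], drop_first: bool = False) -> float:
    """Average tempo from tap times (seconds): 60 * intervals / elapsed time."""
    # Optionally ignore the first event, e.g. the mouse click that starts the counter.
    taps = timestamps[1:] if drop_first else timestamps
    if len(taps) < 2:
        raise ValueError("need at least two taps to measure an interval")
    intervals = len(taps) - 1
    elapsed = taps[-1] - taps[0]
    if elapsed <= 0:
        raise ValueError("tap times must increase")
    return 60.0 * intervals / elapsed
```

For a click at 0 s, a 3 s wait, and nine key taps every 0.3 s (200 bpm), the estimate including the click is 100 bpm, while dropping the click gives 200 bpm. More generally, with an initial wait d and beat period T, the estimate after k key taps is 60k / (d + (k - 1)T); when d > T it rises with every tap but always stays below 60/T.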

Update: here is a better beat counter that is a bit easier to use: Salsa City bar counter.

BPM Counter - detect BPM of any song. BPM Counter is a fast and accurate beats per minute detector for MP3 music.

The JavaScript Source: Math Related: Beat Counter. A manual Beats per Minute (BPM) counter for DJs. Counts the beats-per-minute of a song by tapping a key or the mouse to the beat of a song. Simply click on the page to start the time, then tap any key to the beat.