Keepass was originally a Windows-based password manager. It has since grown into an ecosystem of Keepass-compatible password managers and plugins built around the kdbx file formats and a few protocols.

Most of it, possibly all, is open source. Often you want to use the same credentials on several of your devices and hence you need to be able to sync.

Here I am testing KeepassXC (on Ubuntu, though it should be the same on most Linux distros; it is also available for Windows and macOS) and Keepass2Android.

KeepassXC is a community fork of KeepassX, and Keepass2Android is recommended for Android on the KeepassXC site. Both are open source.

In initial tests so far, the syncing seems to work. Update: I just tested changing the memory hardness of the Argon2 key derivation on the Android side, and it propagated fine to Linux. I then tested changing the number of key derivation rounds on the Linux side, and that propagated fine to Android. Impressive.

Syncing in KeepassXC and Keepass2Android

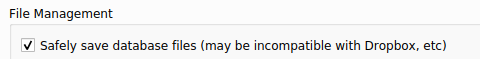

KeepassXC doesn't have any syncing capabilities of its own. It relies on external solutions for that, such as sshfs, Dropbox and so on. I understand that they do not want to clutter the app with syncing code, but my guess is that there will be corner cases depending on the sync technology chosen. KeepassXC itself warns about this, as the screenshot above shows.

Maybe the wording is unfortunate, but it reads as if you have to choose between compatible and safe for "Dropbox, etc".

Keepass2Android, on the other hand, has built-in support for sftp, WebDAV and a number of magic folder solutions (Dropbox, OneDrive et al.).

Another option is to use Syncthing to sync the databases. However, in my experience Syncthing has corner cases of its own. Regardless of which technology you choose, you should also make backups: synchronization is not backup, and a sync can go wrong and wipe your data.
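As a minimal sketch of what "also make backups" could mean in practice, the function below copies a database file to a backup directory under a timestamped name. The function name `backup_kdbx` and any paths you pass to it are my own illustration, not something KeepassXC or Keepass2Android provides.

```shell
# backup_kdbx SOURCE_FILE BACKUP_DIR
# Copies the database to BACKUP_DIR under a timestamped name.
# Hypothetical helper for illustration; not part of any Keepass tool.
backup_kdbx() {
    src="$1"
    dest_dir="$2"
    # Refuse to continue if the source database does not exist.
    [ -f "$src" ] || { echo "no such file: $src" >&2; return 1; }
    mkdir -p "$dest_dir" || return 1
    stamp="$(date +%Y%m%d-%H%M%S)"
    base="$(basename "$src" .kdbx)"
    # -p keeps the original modification time on the copy.
    cp -p "$src" "$dest_dir/$base-$stamp.kdbx"
}
```

It could be called as `backup_kdbx "$HOME/Passwords.kdbx" "$HOME/backups/keepass"` (both paths are placeholders), ideally with the backup directory outside whatever folder is being synced.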

Here are some things to watch out for with KeepassXC and Keepass2Android:

KeepassXC on Ubuntu (and on other Linuxes)

Don't use snap (for most sync scenarios)

The snap-packaged version doesn't connect well to externally mounted filesystems. So if you want to use sshfs or any other such tech for syncing, it's better to use e.g. the AppImage version. This is actually mentioned in their FAQ:

Due to Snap's isolation and security settings, you cannot access any files outside your home directory.

In my experience that also applies to filesystems mounted inside the user's home directory.

https://keepassxc.org/docs/#faq-appsnap-homedir
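If you are unsure how your copy was installed, a rough check is to look at where the executable lives, since snap binaries are normally exposed under `/snap/bin` (or `/var/lib/snapd/snap/bin` on some distros). The command name `keepassxc` and the helper `is_snap_path` are assumptions for this sketch.

```shell
# Returns 0 if the given executable path looks like a snap-installed binary.
# Hypothetical helper; it only inspects the path prefix.
is_snap_path() {
    case "$1" in
        /snap/*|/var/lib/snapd/snap/*) return 0 ;;
        *) return 1 ;;
    esac
}

# "keepassxc" as the command name is an assumption; adjust if yours differs.
kp_path="$(command -v keepassxc || true)"
if [ -z "$kp_path" ]; then
    echo "keepassxc not found in PATH (an AppImage is usually started by its file path)"
elif is_snap_path "$kp_path"; then
    echo "keepassxc at $kp_path looks like the snap package"
else
    echo "keepassxc at $kp_path does not look like a snap"
fi
```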

How to use sshfs

For automounting an sshfs volume that tolerates IP address changes and connection problems on a Linux system running systemd, see my blog post "FSTab: How to mount an sshfs volume that tolerates ip number changes and connection errors".
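For a quick manual test before setting up automounting, something like the following should do. This is not the fstab setup from the blog post, just a plain sshfs mount wrapped in two functions; the remote address, directories and function names are placeholders I made up.

```shell
# Hypothetical values: adjust user, host, remote directory and mount point.
REMOTE="user@example.org:/home/user/keepass"
MOUNTPOINT="$HOME/mnt/keepass"

mount_keepass_share() {
    mkdir -p "$MOUNTPOINT" || return 1
    # reconnect plus the ServerAlive* options let sshfs notice a dead
    # connection and re-establish it instead of hanging.
    sshfs "$REMOTE" "$MOUNTPOINT" \
        -o reconnect,ServerAliveInterval=15,ServerAliveCountMax=3
}

unmount_keepass_share() {
    fusermount -u "$MOUNTPOINT"
}
```

Run `mount_keepass_share`, open the database from the mount point in KeepassXC, and call `unmount_keepass_share` when done.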

Keepass2Android

Sftp rather than WebDAV

Use sftp rather than WebDAV (I'd say), because the sftp option allows a key file instead of a username/password combo, which is the only choice the WebDAV option offers.

Do not bother with specifying the path

Specifying the path in the sftp dialog seems tricky. In my experience it is better to leave it at "/" and navigate to the file in the next dialog.

Keystretching (sometimes called "key derivation") and encryption

Both KeepassXC and Keepass2Android have state-of-the-art keystretching (key derivation) and encryption. The keystretching can be set to Argon2, which is almost too new to trust completely (I would have liked to see scrypt in there too as an option). Still, it's a breath of fresh air compared to the popular PBKDF2, which is not memory-hard and hence ought to be vulnerable to attacks using ASICs.

For the symmetric encryption both AES and ChaCha20 are available, the same ciphers that underpin encryption on the Internet.

How good are KeepassXC and Keepass2Android at syncing with each other?

Well, that remains to be seen! Credentials seem to propagate fine so far. As written further up, I tested changing the memory hardness of the Argon2 key derivation on the Android side, and it propagated fine to Linux. I then tested changing the number of key derivation rounds on the Linux side, and that propagated fine to Android.

With that set up, there is now a need for a backup solution. I'm thinking of using git for this but have not made up my mind yet; it makes sense to use well-known and widely used software components. A malicious attacker who gets commit access to the remote git repository could, however, rewrite the git history and thereby delete old backups.
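As a rough idea of what a git-based backup could look like, here is a sketch that copies the database into a local repository and commits it only when it has changed. The function name `snapshot_kdbx` and the paths are hypothetical, and it assumes your git identity (user.name and user.email) is already configured. Pushing to a remote is left out.

```shell
# snapshot_kdbx DATABASE_FILE REPO_DIR
# Copies the database into a git repository and commits it if it changed.
# Hypothetical helper for illustration only.
snapshot_kdbx() {
    db="$1"
    repo="$2"
    mkdir -p "$repo" || return 1
    # Create the repository on first use.
    [ -d "$repo/.git" ] || git -C "$repo" init --quiet || return 1
    cp -p "$db" "$repo/" || return 1
    name="$(basename "$db")"
    git -C "$repo" add -- "$name" || return 1
    # Commit only when the staged file differs from the last snapshot.
    if ! git -C "$repo" diff --cached --quiet; then
        git -C "$repo" commit --quiet \
            -m "Snapshot $name $(date +%Y-%m-%dT%H:%M:%S)"
    fi
}
```

Since a kdbx file is encrypted binary data, git stores a full copy per changed snapshot rather than small diffs, so the repository grows with every change.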

It seems possible to make a Git repository where history cannot be rewritten. You need to do two things:

- Only allow fast-forward pushes

- Deny all deletes of refs (branches and tags)

git config --system receive.denyNonFastForwards true

and

git config --system receive.denyDeletes true

Got the info from here: https://stackoverflow.com/questions/2085871/strategy-for-preventing-or-catching-git-history-rewrite
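To see the effect, here is a small sketch that applies the two settings to a throwaway bare repository and then tries a forced push and a branch deletion against it. Note two things that are my own choices: the settings are applied per repository rather than with --system, and the directories and commit identity are made up for the demo. The receive.* settings take effect on the receiving side, so in real use they belong on the machine hosting the remote repository.

```shell
# Throwaway directories; in real use the bare repository lives on the server.
work="$(mktemp -d)"
remote="$work/backup.git"
client="$work/client"

git init --quiet --bare "$remote"
# Same two settings, scoped to this repository instead of --system.
git -C "$remote" config receive.denyNonFastForwards true
git -C "$remote" config receive.denyDeletes true

# A client clones the (still empty) repository and pushes one commit.
git clone --quiet "$remote" "$client" 2>/dev/null
git -C "$client" config user.name "Example"                  # demo identity
git -C "$client" config user.email "example@example.invalid"
echo one > "$client/file.txt"
git -C "$client" add file.txt
git -C "$client" commit --quiet -m "first"
git -C "$client" push --quiet origin HEAD

# Rewrite the commit locally, then try to force it onto the remote.
git -C "$client" commit --quiet --amend -m "rewritten"
if git -C "$client" push --quiet --force origin HEAD 2>/dev/null; then
    force_result="accepted"
else
    force_result="rejected"
fi

# Try to delete the branch on the remote.
branch="$(git -C "$client" symbolic-ref --short HEAD)"
if git -C "$client" push --quiet origin --delete "$branch" 2>/dev/null; then
    delete_result="accepted"
else
    delete_result="rejected"
fi

echo "force push: $force_result, branch delete: $delete_result"
```

With both settings in place, both attempts should come back as rejected and the remote should still hold the commit "first". Someone with shell access to the server can still change the configuration or edit the repository directly, so this only guards against attackers limited to pushing.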